Stanford University is so

startlingly paradisial, so fragrant and sunny, it’s as if you could eat

from the trees and live happily forever. Students ride their bikes

through manicured quads, past blooming flowers and statues by Rodin, to

buildings named for benefactors like Gates, Hewlett, and Packard.

Everyone seems happy, though there is a well-known phenomenon called the

“Stanford duck syndrome”: students seem cheerful, but all the while

they are furiously paddling their legs to stay afloat. What they are

generally paddling toward are careers of the sort that could get their

names on those buildings. The campus has its jocks, stoners, and poets,

but what it is famous for are budding entrepreneurs, engineers, and

computer aces hoping to make their fortune in one crevasse or another of

Silicon Valley.

Innovation comes from myriad sources, including

the bastions of East Coast learning, but Stanford has established itself

as the intellectual nexus of the information economy. In early April,

Facebook acquired the photo-sharing service Instagram, for a billion

dollars; naturally, the co-founders of the two-year-old company are

Stanford graduates in their late twenties. The initial investor was a

Stanford alumnus.

The campus, in fact, seems designed to nurture

such success. The founder of Sierra Ventures, Peter C. Wendell, has been

teaching Entrepreneurship and Venture Capital part time at the business

school for twenty-one years, and he invites sixteen venture capitalists

to visit and work with his students. Eric Schmidt, the chairman of

Google, joins him for a third of the classes, and Raymond Nasr, a

prominent communications and public-relations executive in the Valley,

attends them all. Scott Cook, who co-founded Intuit, drops by to talk to

Wendell’s class. After class, faculty, students, and guests often pick

up lattes at Starbucks or cafeteria snacks and make their way to outdoor

tables.

On a sunny day in February, Evan Spiegel had an

appointment with Wendell and Nasr to seek their advice. A lean

mechanical-engineering senior from Los Angeles, in a cardigan, T-shirt,

and jeans, Spiegel wanted to describe the mobile-phone application,

called Snapchat, that he and a fraternity brother had designed. The idea

came to him when a friend said, “I wish these photos I am sending this

girl would disappear.” As Spiegel and his partner conceived it, the app

would allow users to avoid making youthful indiscretions a matter of

digital permanence. You could take pictures on a mobile device and share

them, and after ten seconds the images would disappear.

Spiegel

needed some business advice from campus mentors. He and his partner

already had forty thousand users and were maxing out their credit cards.

If they reached a million customers, the cost of their computer servers

would exceed twenty thousand dollars per month. Spiegel told Wendell

and Nasr that he needed investment money but feared going to a

venture-capital firm, “because we don’t want to lose control of the

company.” When Wendell asked if he’d like an introduction to the people

at Twitter, Spiegel said that he was afraid that they might steal the

idea. Wendell and Nasr suggested a meeting with Google’s venture-capital

arm. Spiegel agreed, Nasr arranged it, and Spiegel and Google are now

talking.

Spiegel

knows that mentors like Wendell will play an important part in helping

him to realize his dreams for the mobile app. “I had the opportunity to

sit in Peter’s class as a sophomore,” Spiegel says. “I was sitting next

to Eric Schmidt. I was sitting next to Chad Hurley, from YouTube. I

would go to lunches after class and listen to these guys talk. I met

Scott Cook, who’s been an incredible mentor.” His faculty adviser, David

Kelley, the head of the school of design, put him in touch with

prospective angel investors.

If the Ivy League was the breeding

ground for the élites of the American Century, Stanford is the farm

system for Silicon Valley. When looking for engineers, Schmidt said,

Google starts at Stanford. Five per cent of Google employees are

Stanford graduates. The president of Stanford, John L. Hennessy, is a

director of Google; he is also a director of Cisco Systems and a

successful former entrepreneur. Stanford’s Office of Technology

Licensing has licensed eight thousand campus-inspired inventions, and

has generated $1.3 billion in royalties for the university. Stanford’s

public-relations arm proclaims that five thousand companies “trace their

origins to Stanford ideas or to Stanford faculty and students.” They

include Hewlett-Packard, Yahoo, Cisco Systems, Sun Microsystems, eBay,

Netflix, Electronic Arts, Intuit, Fairchild Semiconductor, Agilent

Technologies, Silicon Graphics, LinkedIn, and E*Trade.

John

Doerr, a partner at the venture-capital firm Kleiner Perkins Caufield

& Byers, which bankrolled such companies as Google and Amazon,

regularly visits campus to scout for ideas. He describes Stanford as

“the germplasm for innovation. I can’t imagine Silicon Valley without Stanford University.”

Leland

Stanford was a Republican governor and senator in the late nineteenth

century, who made a fortune from the Central Pacific and Southern

Pacific railroads, which he had helped to found. Stout and bearded, he

could be typecast, like Gould, Morgan, and Vanderbilt, as a robber

baron. Without knowing it, this man of the industrial revolution spent

part of his legacy establishing a center for what would become the Age

of Innovation. After his only child, Leland, Jr., died, of typhoid

fever, at fifteen, Stanford and his wife, Jane, bequeathed more than

eight thousand acres of farmland, thirty-five miles south of San

Francisco, to found a university in their son’s name. They hired

Frederick Law Olmsted, who designed Central Park, to create an open

campus with no walls, vast gardens and thousands of palm and Coast Live

Oak trees, and California mission-inspired sandstone buildings with

red-tiled roofs. Today, the campus extends from Palo Alto to Woodside

and Portola Valley, spanning two counties, three Zip Codes, and six

government jurisdictions.

Stanford University opened its doors in

1891. Jane and Leland Stanford said in their founding grant that the

university, rather than becoming an ivory tower, would “qualify its

students for personal success, and direct usefulness in life.” From its

early days, engineers and scientists attracted government and corporate

research funds as well as venture capital for start-ups, first for

innovations in radio and broadcast media, then for advances in

electronics, microprocessing, medicine, and digital technology. One of

the first big tech companies in Silicon Valley—Federal Telegraph, which

produced radios—was started by a young Stanford graduate in 1909. The

university’s first president, David Starr Jordan, was an angel investor.

Frederick Terman, an engineer who joined the faculty in 1925,

became the dean of the School of Engineering after the Second World War

and the provost in 1955. He is often called “the father of Silicon

Valley.” In the thirties, he encouraged two of his students, William

Hewlett and David Packard, to construct in a garage a new line of audio

oscillators that became the first product of the Hewlett-Packard

Company.

Terman nurtured start-ups by creating the Stanford

Industrial Park, which leased land to tech firms like Hewlett-Packard;

today, the park is home to about a hundred and fifty companies. He

encouraged his faculty to serve as paid consultants to corporations, as

he did, to welcome tech companies on campus, and to persuade them to

subsidize research and fellowships for Stanford’s brightest students.

William

F. Miller, a physicist, was the last Stanford faculty member recruited

by Terman, and he rose to become the university’s provost. Miller, who

is now eighty-six and an emeritus professor at Stanford’s business

school, traces the symbiotic relationship between Stanford and Silicon

Valley to Stanford’s founding. “This was kind of the Wild West,” he

said. “The gold rush was still on. Custer’s Last Stand was only nine

years before. California had not been a state very long—roughly, thirty

years. People who came here had to be pioneers. Pioneers had two

qualities: one, they had to be adventurers, but they were also community

builders. So the people who came here to build the university also

intended to build the community, and that meant interacting with

businesses and helping create businesses.”

President Hennessy

believes that the entrepreneurial spirit is part of the university’s

foundation, and he attributes this freedom partly to California’s

relative lack of legacy industries or traditions that need to be

protected, so “people are willing to try things.” At Stanford more than

elsewhere, the university and business forge a borderless community in

which making money is considered virtuous and where participants profess

a sometimes inflated belief that their work is changing the world for

the better. Faculty members commonly invest in start-ups launched by

their students or colleagues. There are probably more faculty

millionaires at Stanford than at any other university in the world.

Hennessy earned six hundred and seventy-one thousand dollars in salary

from Stanford last year, but he has made far more as a board member of

and shareholder in Google and Cisco.

Very often, the wealth

created by Stanford’s faculty and students flows back to the school.

Hennessy is among the foremost fund-raisers in America. In his twelve

years as president, Stanford’s endowment has grown to nearly seventeen

billion dollars. In each of the past seven years, Stanford has raised

more money than any other American university.

Like other élite

schools, Stanford has become increasingly diverse. Caucasian students

are now a minority on campus; roughly sixty per cent of undergraduates,

and more than half of graduate students, are Asian, black, Hispanic,

Native American, or from overseas; seventeen per cent of Stanford’s

undergraduates are the first member of their family to attend college.

Half of Stanford’s undergraduates receive need-based financial aid: if

their annual family income is below a hundred thousand dollars, tuition

is free. “They are the locomotive kids, pulling their whole family

behind them,” Tobias Wolff, a novelist who has taught at Stanford for

nearly two decades, says.

But Stanford’s entrepreneurial culture

has also turned it into a place where many faculty and students have a

gold-rush mentality and where the distinction between faculty and

student may blur as, together, they seek both invention and fortune.

Corporate and government funding may warp research priorities. A quarter

of all undergraduates and more than fifty per cent of graduate students

are engineering majors. At Harvard, the figures are four and ten per

cent; at Yale, they’re five and eight per cent. Some ask whether

Stanford has struck the right balance between commerce and learning,

between the acquisition of skills to make it and intellectual discovery

for its own sake.

David Kennedy, a Pulitzer Prize-winning

historian who has taught at Stanford for more than forty years, credits

the university with helping needy students and spawning talent in

engineering and business, but he worries that many students uncritically

incorporate the excesses of Silicon Valley, and that there are not

nearly enough students devoted to the liberal arts and to the idea of

pure learning. “The entire Bay Area is enamored with these notions of

innovation, creativity, entrepreneurship, mega-success,” he says. “It’s

in the air we breathe out here. It’s an atmosphere that can be toxic to

the mission of the university as a place of refuge, contemplation, and

investigation for its own sake.”

In February,

2011, a dozen members of the Bay Area business community had dinner with

President Obama at the home of the venture capitalist John Doerr. Steve

Jobs, who was in the late stages of the illness that killed him, eight

months later, sat at a large rectangular table beside Obama; Mark

Zuckerberg, of Facebook, sat on the other side. They were flanked by

Silicon Valley corporate chiefs, from Google, Cisco, Oracle, Genentech,

Twitter, Netflix, and Yahoo. The only non-business leader invited was

Hennessy. His attendance was not a surprise. “John Hennessy is the

godfather of Silicon Valley,” Marc Andreessen, a venture capitalist, who

as an engineering student co-invented the first Internet browser, says.

Hennessy

is fifty-nine, six feet tall, and trim, with thinning gray hair and a

square jaw. He talks fast and loud, one thought colliding with the next;

he bubbles over with information and data points. His laugh is a sharp

cackle. Hennessy grew up in Huntington, Long Island. His father was an

aerospace engineer; his mother quit teaching to rear six children. As a

child, he read straight through the sixteen-volume encyclopedia his

parents gave him. He studied electrical engineering at Villanova

University and went on to earn a doctorate in computer science at Stony

Brook University. He married his high-school sweetheart, Andrea Berti,

and, in 1977, became an assistant professor of electrical engineering at

Stanford.

Hennessy’s academic work focussed on redesigning

computer architecture, primarily through streamlining software that

would make processors work more efficiently; the technology was called

Reduced Instruction Set Computer (RISC). He co-wrote two

textbooks that are still considered to be seminal in computer-science

classes. He took a year’s sabbatical from Stanford in 1984 to co-found

MIPS Computer Systems. In 1992, it was sold to Silicon Graphics for three hundred and thirty-three million dollars.

MIPS technology has contributed to the miniaturization of electronics,

making possible the chips that power everything from laptops and mobile

phones to refrigerators and automobile dashboards. “RISC was foundational,” Andreessen says. “It was one of the maybe five or six

things in the history of the industry that really matter.”

Hennessy

returned to teaching at Stanford, and became a full professor in 1986.

In 1996, he was elevated to dean of the School of Engineering. He had

little time to teach, but he marvelled at the inventions of his graduate

students. He describes the time, in the mid-nineties, when Jerry Yang

and David Filo took him to visit their campus trailer, which was

littered with pizza boxes and soda cans, to show off a directory of Web

sites called Yahoo. He calls this an “aha moment,” because he realized

that the Web was “going to change how everyone communicated.”

In

1998, Larry Page and Sergey Brin, who were graduate students, showed

Hennessy their work on search software that they later called Google. He

typed in the name Gerhard Casper, and instead of getting results for

Casper the Friendly Ghost, as he did on AltaVista, up popped links to

Gerhard Casper the president of Stanford. He was thrilled when members

of the engineering faculty mentored Page and Brin and later became

Google investors, consultants, and shareholders. Since Stanford owned

the rights to Google’s search technology, he was also thrilled when, in

2005, the stock grants that Stanford had received in exchange for

licensing the technology were sold for three hundred and thirty-six

million dollars.

In 1999, after Condoleezza Rice stepped down as

provost to become the chief foreign-policy adviser to the Republican

Presidential candidate George W. Bush, Casper offered Hennessy the

position of chief academic and financial officer of the university. Soon

afterward, Hennessy induced a former electrical-engineering faculty

colleague, James Clark, who had founded Silicon Graphics (which

purchased MIPS), to give a hundred and fifty million

dollars to create the James H. Clark Center for medical and scientific

research. Less than a year later, Casper stepped down as president and

Hennessy replaced him.

Hennessy joined Cisco’s corporate board in

2002, and Google’s in 2004. It is not uncommon for a university

president to be on corporate boards. According to James Finkelstein, a

professor at George Mason University’s School of Public Policy, a third

of college presidents serve on the boards of one or more publicly traded

companies. Hennessy says that his outside board work has made him a

better president. “Both Google and Cisco face—and all companies in a

high-tech space face—a problem that’s very similar to the ones

universities face: how do they maintain a sense of innovation, of a

willingness to do the new thing?” he says.

But Gerhard Casper

worries that any president sitting on a board can pose a conflict of

interest. Stanford was one of the first universities to agree to allow

Google to digitize a third of its library—some three million books—at a

time when publishers and the Authors Guild were suing the company for

copyright infringement. Hennessy says that he did not participate in the

decision and “never saw the agreement.” But shouldn’t the president of a

university see an agreement that may violate copyright laws and that

represents a historic clash between the university and the publishing

industry? And shouldn’t he worry that those who made the decision might

be eager to reach an agreement that would please him?

Debra Satz,

the senior associate dean for Humanities and Arts at Stanford, who

teaches ethics and political philosophy, is troubled that Hennessy is

handcuffed by his industry ties. This subject has often been discussed

by faculty members, she says: “My view is that you can’t forbid the

activity. Good things come out of it. But it raises dangers.” Philippe

Buc, a historian and a former tenured member of the Stanford faculty,

says, “He should not be on the Google board. A leader doesn’t have to

express what he wants. The staff will be led to pro-Google actions

because it anticipates what he wants.”

Hennessy has also invested

in such venture-capital firms as Kleiner Perkins, Sequoia Capital, and

Foundation Capital—companies that have received investment funds from

the university’s endowment board, on which Hennessy sits. In 2007, an

article published in the Wall Street Journal, “THE GOLDEN TOUCH OF STANFORD’S PRESIDENT,” highlighted the cozy relationship between Hennessy and Silicon Valley firms. The Journal

reported that during the previous five years he had earned forty-three

million dollars; a portion of that sum came from investments in firms

that also invest Stanford endowment monies. Hennessy flicks aside

criticism of those investments, noting that he isn’t actively involved

in managing the endowment and likening them to a mutual fund: “I’m a

limited partner. I couldn’t even tell you what most of these investments

were in.”

Perhaps because his position is so seemingly secure,

and his assets so considerable, Hennessy rarely appears defensive. He

knows that questions about conflicts of interest won’t define his

legacy, and they seem less pressing when Stanford is thriving.

Facebook’s purchase of Instagram made millions for, among others,

Sequoia Capital—which means that it made money for Hennessy and for

Stanford’s endowment, too.

Two decades ago, when the Stanford humor magazine, the Chaparral, did a spoof issue with the Harvard Lampoon, the Chappie,

as it’s called, rearranged Harvard’s logo—“Ve Ri Tas”—to “Ve Ry Tan.”

Sometimes the campus stars are athletes. This year, the quarterback

Andrew Luck is the likely No. 1 pick in the N.F.L. draft. He stayed

through his senior year to earn a degree in architectural design. “I sat

next to him in a class once,” Ishan Nath, a senior economics major,

says. “I didn’t talk to him, but I sneezed and he said, ‘Bless you.’ For

the next month, like, ‘I got blessed by Andy Luck!’ ” But, at Stanford,

star athletes don’t always have the status they do at other schools.

When Tiger Woods was a student, in the mid-nineties, not everyone knew

who he was. One classmate, Adam Seelig, now a poet and a theatre

director, spotted Woods practicing in his hallway one night and returned

to his own dorm to ask who “this total loser practicing putts at 11 P.M. on a Saturday night” was.

Thirty-four

thousand high-school seniors applied for Stanford’s current freshman

class, and only twenty-four hundred—seven per cent—were accepted. Part

of the appeal, undoubtedly, is the school’s laid-back vibe. There are

nearly as many bicycles on campus—thirteen thousand—as undergraduate and

graduate students. Flip-flops are worn year-round.

“To me, it

felt like a four-year camp,” Devin Banerjee, who graduated last year and

is now a reporter for Bloomberg News, says. “We had so many camp

counsellors. You never felt lost.” Michael Tubbs, a senior who was

brought up by a single mother and whose father has been in prison for

most of his son’s life, says that he could not have attended Stanford

without a full financial-aid scholarship. He is an honors student, and

marvels at how financial aid has produced a campus of diverse students

who are unburdened by student debt—and who thus don’t have to spend the

first five years of their career earning as much money as they can.

After he graduates, Tubbs plans to return home to Stockton, California,

to challenge an incumbent member of the city council this fall.

To

listen to students who are presumably in the most anxious, rebellious

period of their lives express such serenity is jarring. One afternoon, I

met with some undergraduates at the CoHo coffee-house. They almost

uniformly described an idyllic university life. Tenzin Seldon, a senior

and a comparative-studies major from India, was one of five Rhodes

Scholars chosen from Stanford this year. She said that Stanford is

particularly welcoming to foreign students. Ishan Nath, who also won a

Rhodes Scholarship, disagrees with those who say that Stanford is a

utilitarian university: “There are plenty of opportunities for learning

for the sake of learning here.” Kathleen Chaykowski, a junior, was a

premed and an engineering major who switched to English, and last year

was the editor-in-chief of the Stanford Daily. She spoke about

the risk-taking that is integral to Silicon Valley. “My academic adviser

said, ‘I want you to have a messy career at Stanford. I want to see you

try things, to discover the parts of yourself that you didn’t know

existed.’ ”

Each year, Hennessy visits four to five freshman

dormitories to field questions. When he visited the Cedro dorm, on

January 30th, the forty or so students gathered around him in the

recreation room often asked the kinds of benign question posed to

celebrities on TV shows: Did he miss computer science? Or they sometimes

asked the questions of young careerists: To be successful, what should

we do?

The students’ calm, however, belies the stress that they

are under. “Looking around, most everyone looks incredibly productive,

seems surrounded by friends, and ultimately appears to be fundamentally

happy. This aura of good cheer is contagious,” the editorial board of the

Stanford Daily wrote in early April, in an essay that described the Stanford duck

syndrome in detail. “Yet this contagious happiness has its dark side:

Feeling dejected or unhappy in a place like Stanford causes one to feel

abnormal and out-of-place, so we may tend to internalize and brood over

this lack of happiness instead of productively addressing the

situation.”

In late 2010, Mayor Michael

Bloomberg announced that New York wanted to replicate the success of

Silicon Valley in the city’s Silicon Alley, and he called for a public

competition among universities to build an élite graduate school of

engineering and applied sciences on city-owned land. Seven universities

submitted proposals for a campus on Roosevelt Island, and Stanford was

widely viewed as the early front-runner.

Stanford’s proposal

contained a cover letter from Hennessy that conveyed his sweeping

ambition:

“StanfordNYC has the potential to help catapult New York City

into a leadership position in technology, to enhance its entrepreneurial

endeavors and outcomes, diversify its economic base, enhance its talent

pool and help our nation maintain its global lead in science and

technology.” Stanford proposed spending an initial two hundred million

dollars to build a campus housing two hundred faculty and more than two

thousand graduate students. It pledged to raise $1.5 billion for the

campus.

This was not to be a satellite campus. It would be solely

an engineering and applied-science school. Hennessy proposed that each

department base three-quarters of its faculty in Palo Alto and a quarter

on Roosevelt Island. Nor was it to be solely a research facility.

(Stanford has one at Peking University, in Beijing.) Faculty members

across the country would share videoconference screens, and students in

New York would be able to take online classes based in Palo Alto.

Stanford’s chief fund-raiser, Martin Shell, who is the vice-president

for development, says, “New York City could be the place we could begin

to put into place a truly second campus. One hundred years from now, we

could be a global university.”

Not everyone on Stanford’s campus

shared Hennessy’s enthusiasm. Members of the humanities faculty were

upset that Stanford proposed to create a second campus without including

liberal-arts faculty or students. Casper, the former Stanford

president, asked whether the Roosevelt Island project would “reinforce

the cliché that we are science and engineering and biology driven and

the arts and humanities are stepchildren.” According to Jeffrey Koseff,

the director of Stanford’s Woods Institute for the Environment, there

were “mixed feelings,” because of fears that resources would be drained

from the Palo Alto campus. And there were additional questions: Would

Stanford be able to recruit top faculty and students to New York when

the technological heart of the country was in Silicon Valley? Could

Stanford really reproduce in New York its “secret sauce,” a phrase that

university officials use almost mystically to describe whatever it is

that makes the school succeed as an entrepreneurial incubator?

Exactly

what that sauce is provokes much speculation, but an essential

ingredient is the attitude on campus that business is a partner to be

embraced, not kept at arm’s length. The Stanford benefactor and former

board chairman Burton McMurtry says, “When I first came here, the

faculty did not look down its nose at industry, like most faculties.”

Stanford’s proposal to New York, almost as a refrain, repeatedly

referred to the “close ties between the industry and the university.”

People

may remember Hennessy’s reign most for the expansion of Stanford into

Silicon Valley. But his principal academic legacy may be the growth of

what’s called “interdisciplinary education.” This is the philosophy now

promoted at the various schools at Stanford—engineering, business,

medicine, science, design—which encourages students from diverse majors

to come together to solve real or abstract problems. The goal is to have

them become what are called “T-shaped” students, who have depth in a

particular field of study but also breadth across multiple disciplines.

Stanford hopes that the students can also develop the social skills to

collaborate with people outside their areas of expertise. “Ten years

ago, ‘interdisciplinary’ was a code word for something soft,” Jeff

Koseff says. “John changed that.”

Among the bolder initiatives to

create T-students is the Institute of Design at Stanford, or the

d.school, which was founded seven years ago and is housed in the

school of engineering.

Its founder and director is David Kelley, who, with a thick black

mustache and black-framed eyeglasses, looks like Groucho Marx, without

the cigar. His mission, he says, is to instill “empathy” in his

students, to encourage them to see the human side of the challenges

posed in class, and to provoke them to be creative. Stanford is not the

only university to adopt this approach to learning—M.I.T., among others,

does, too. But Kelley’s effort is widely believed to be the most

audacious. His classes stress collaboration across disciplines and

revolve around projects to advance social progress. The school

concentrates on four areas: the developing world; sustainability; health

and wellness; and K-12 education. The d.school space is open, with

sliding doors and ubiquitous whiteboards and tables too small to

accommodate laptops; Kelley doesn’t want students retreating into their

in-boxes. There are very few lectures at the school, and students are

graded, in part, on their collaborative skills and on evaluations by

fellow-students.

Sarah Stein Greenberg, who is the managing

director of the d.school, was a student and then a fellow. Her 2006

class project was to figure out an inexpensive way for farmers in Burma

to extract water from the ground for irrigation. Greenberg and her team

of students travelled to Burma, and devised a cheap and efficient

treadle pump that looks like a Stairmaster, which the farmer steps on in

order to extract water. A local nonprofit partner manufactured and sold

twenty thousand pumps, costing thirty-seven dollars each. In his

unpretentious, book-filled office, John Hennessy displays items that

have been produced, at least in part, by Stanford students to assist

developing countries, including a baby warmer for premature babies; the

simple device’s cost is one per cent of an incubator’s.

In late

January, a popular d.school class, Entrepreneurial Design for Extreme

Affordability, taught by James M. Patell, a business-school professor,

consisted of thirty-seven graduate and three undergraduate students from

thirteen departments, including engineering, political science,

business, medicine, biology, and education. It was early in the quarter,

and Patell offered the students a choice of initial projects. One was

to create a monitoring system to help the police locate lost children.

Another was to design a bicycle-storage system.

David Janka, a

teaching fellow, who walked about the class’s vast open space wearing

tapered khakis and shoes without socks, invited the students to gather

in groups around the white wooden tables to discuss how to address these

challenges. Patell and Janka were joined by David Beach, a professor of

mechanical engineering; Julian Gorodsky, a practicing therapist and the

“team shrink” at the d.school; and Stuart Coulson, a retired venture

capitalist who volunteers at the university up to fifty hours per week.

“The kinds of project we put in front of our students don’t have right

and wrong answers,” Greenberg says. “They have good, better, and really,

really better.”

Justin Ferrell, who was attending Stanford on a

one-year fellowship, on leave from his job as the digital-design

director at the Washington Post, said that he was impressed by

“the bias toward action” at the d.school. Newspapers have bureaucracy,

committees, hierarchies, and few engineers, he said. At the Post, “diversity” was defined by ethnicity and race. At the d.school, diversity is defined by majors, by people who think different.

Multidisciplinary

courses at Stanford worked for two earlier graduates, Kevin Systrom and

Mike Krieger, the founders of Instagram. In 2005 and 2007,

respectively, Systrom and Krieger were awarded Mayfield fellowships.

(Only a dozen upperclassmen are chosen each year.) In an intense

nine-month work-study program, fellows immerse themselves in the

theoretical study of entrepreneurship, innovation, and leadership, and

work during the summer in a Valley start-up. Tom Byers, an engineering

professor, founded the program in 1996, and says that it aims to impart

to fellows this message: “Anything is possible.” Byers has kept in touch

with Systrom and Krieger and remembers them as “quiet and quite

humble,” by which he means that they were outstanding human beings who

could get others to follow them. They were, in short, T-students.

The

most articulate critic of the way the university functions might be the

man who used to run it. Gerhard Casper, who is a senior fellow at

Stanford, is full of praise for Hennessy, and the two men clearly like

each other. Nonetheless, it wasn’t hard to find a few daggers in a

speech that Casper gave in May, 2010, in Jerusalem. The United States

has “two types of college education that are in conflict with each

other,” he said. One is “the classic liberal-arts model—four years of

relative tranquility in which students are free to roam through

disciplines, great thoughts, and great works with endless options and

not much of a rationale.” The second is more utilitarian: “A college

degree is expected to lead to a job, or at least to admission to a

graduate or professional school.” The best colleges divide the first two

years into introductory courses and the last two into the study of a

major, all the while trying to expose students to “a broad range of

disciplines and modes of thought.” Students, he declared, are not

broadly educated, not sufficiently challenged to “search to know.”

Instead, universities ask them to serve “the public, to work directly on

solutions in a multidisciplinary way.” The danger, he went on, is “that

academic researchers will not only embrace particular solutions but

will fight for them in the political arena.” A university should keep to

“its most fundamental purpose,” which is “the disinterested pursuit of

truth.” Casper said that he worried that universities would be diverted

from basic research by the lure of new development monies from “the

marketplace,” and that they would shift to “ever greater emphasis on

direct usefulness,” which might mean “even less funding of and attention

to the arts and humanities.”

When I visited Casper in his office

on campus this winter, I asked him if his critique applied to Stanford.

“I am a little concerned that Stanford, along with its peers, is now

justifying its existence mostly in terms of what it can do for humanity

and improve the world,” he answered. “I am concerned that a

research-intense university will become too result-oriented,” a

development that risks politicizing the university. And it also risks

draining more resources from liberal arts at a time when “most

undergraduates at most universities are there not because they really

want to get a broad education but because they want to get the

wherewithal for a good job.”

John Hennessy is familiar with

Casper’s Jerusalem speech. “It applies to everyone—us, too,” he says.

Getting into college is very competitive, tuition is very expensive,

and, with economic uncertainty, students become preoccupied with

majoring in subjects that may lead to jobs. “That’s why so many students

are majoring in business,” Hennessy says, and why so few are humanities

majors. He shares the concern that too many students are too

preoccupied with getting rich. “It’s true broadly, not just here,” he

says.

Miles Unterreiner, a senior, fretted in the Stanford Daily that

students spent too much time networking and strategizing and becoming

“slaves to the dictates of a hoped-for future,” and too little time

being spontaneous. “Stanford students are superb consequentialists—that

is, we tend to measure the goodness of actions by their eventual

results,” he wrote. “Bentham and Mill would be proud. We excel at making

rational calculations of expected returns to labor and investment,

which is probably why so many of us will take the exhortation to occupy

Wall Street quite literally after graduation. So before making any

decision, we ask one, very simple question: What will I get out of it?”

“At

most great universities, humanities feel like stepchildren,” Casper

told me. Two members of the humanities faculty—David Kennedy and Tobias

Wolff, a three-time winner of the O. Henry Award for his short

stories—extoll Stanford’s English and history departments but worry that

the university has acquired a reputation as a place for people more

interested in careers or targeted education than in a lofty “search for

truth.”

Attempting to address this weakness, Stanford released,

in January, a study of its undergraduate education. The report promoted

the T-student model embraced by Hennessy. The original Stanford “object”

of creating “usefulness in life,” though affirmed, was said to be

insufficient. “We want our students not simply to succeed but to

flourish; we want them to live not only usefully but also creatively,

responsibly, and reflectively.” The report was harsh:

The

long-term value of an education is to be found not merely in the

accumulation of knowledge or skills but in the capacity to forge fresh

connections between them, to integrate different elements from one’s

education and experience and bring them to bear on new challenges and

problems. . . . Yet we were struck by how little attention most

departments and programs have given to cultivating this essential

capacity. We were also surprised, and somewhat chagrined, to discover

how infrequently some of our students exercise it. For all their

extraordinary energy and range, many of the students we encountered lead

curiously compartmentalized lives, with little integration between the

different spheres of their experience.

Like

any president of a large university, John Hennessy is subject to a

relentless schedule of breakfasts, meetings, lunches, speeches,

ceremonies, handshakes, dinners, and late-night calls alerting him to an

injury or a fatality on campus. His home becomes a public space for

meetings and entertaining. He juggles various constituencies—faculty,

administrators, students, alumni, trustees, athletics. The routine

becomes a daily blur, compelling a president to want to break away and

seek a larger vision, something that becomes his stamp, his legacy. For a

while, it seemed that StanfordNYC might provide that legacy.

Hennessy

declared that a New York campus was “a landmark decision.” He invested

enormous time and effort to overcome faculty, alumni, trustee, and

student unease about diverting campus resources for such a grandiose

project. “I was originally a skeptic,” Otis Reid, a senior economics

major, says. But Hennessy persuaded him, by arguing that Stanford’s

future will be one of expansion, and Reid agreed that New York was a

better place to go first than Abu Dhabi.

On December 16, 2011,

Stanford announced that it was withdrawing its bid. Publicly, the

university was vague about the decision, and, in a statement, Hennessy

praised “the mayor’s bold vision.” But he was seething. In January, he

told me that the city had changed the terms of the proposed deal. After

seven universities had submitted their bids, he said, the city suddenly

wanted Stanford to agree that the campus would be operational, with a

full complement of faculty, sooner than Stanford thought was feasible.

The city, according to Debra Zumwalt, Stanford’s general counsel and

lead negotiator, added “many millions of dollars in penalties that were

not in the original proposal, including penalizing Stanford for failure

to obtain approvals on a certain schedule, even if the delays were the

fault of the city and not Stanford. . . . I have been a lawyer for over

thirty years, and I have never seen negotiations that were handled so

poorly by a reputable party.” One demand that particularly infuriated

Stanford was a fine of twenty million dollars if the City Council, not

Stanford, delayed approval of the project. These demands came from city

lawyers, not from the Mayor or from a deputy mayor, Robert Steel, who

did not participate in the final round of negotiations with Stanford

officials. However, city negotiators were undoubtedly aware that Mayor

Bloomberg, in a speech at M.I.T., in November, had said of two of the

applicants, “Stanford is desperate to do it. Cornell is desperate to do

it. . . . We can go back and try to renegotiate with each” university.

Out of the blue, Hennessy says, the city introduced the new demands.

To

Hennessy, these demands illustrated a shocking difference between the

cultures of Silicon Valley and of the city. “I’ve cut billion-dollar

deals in the Valley with a handshake,” Hennessy says. “It was a very

different approach”—and, he says, the city was acting “not exactly like a

partner.”

Yet the decision seemed hasty. Why would Hennessy, who

had made such an effort to persuade the university community to embrace

StanfordNYC, not pause to call a business-friendly mayor to try to get

the city to roll back what he saw as its new demands? Hennessy says that

his sense of trust was fundamentally shaken. City officials say they

were surprised by the sudden pullout, especially since Hennessy had an

agreeable conversation with Deputy Mayor Steel earlier that same week.

Steel

insists that “the goalposts were fixed.” All the stipulations that

Stanford now complains about, he says, were part of the city’s original

package. Actually, they weren’t. In the city’s proposal request, the due

dates and penalties were left blank. Seth Pinsky, the president of the

New York City Economic Development Corporation, who was one of the

city’s lead negotiators, says that these were to be filled in by each

bidder and then discussed in negotiations. “The more aggressive they

were on the schedule and the more aggressive they were on the amount,

the more favorably” the city looked at the bid, Pinsky told me. In the

negotiations, he said, he tried to get each bidder to boost its offer by

alerting it to more favorable competing bids. At one point, Stanford

asked about an ambiguous clause in the city’s proposal request: would

the university have to indemnify the city if it were sued for, say,

polluted water on Roosevelt Island? The city responded that the

university would. According to Pinsky, city lawyers said that this was

“not likely to produce significant problems,” and that other bidders did

not object. To Pinsky and the city, these demands—and the

twenty-million-dollar penalty if the City Council’s approval was

delayed—were “not uncommon,” since developers often “take liability for

public approvals.” To Stanford, the stipulations made it seem as if the

goalposts were not fixed.

Three days after Stanford withdrew,

the city awarded the contract to Cornell University and its junior

partner, the Technion-Israel Institute of Technology, the oldest

university in Israel. Not a few Hennessy and Stanford partisans were

pleased. “I am very relieved,” Gerhard Casper said.

Jeff Koseff,

who played golf with Hennessy within a few days of Stanford’s

withdrawal, recalls, “He was already talking about what we could do

next.” One venture that Hennessy was exploring, though there is as yet

no concrete plan, is working with the City College of New York to

establish a Stanford beachhead in Manhattan. Deputy Mayor Steel says,

“I’d be ecstatic.” Still, a Stanford official is dubious: “John’s

disillusionment with the city is pretty thorough.”

Another

person who is pleased with the withdrawal is Marc Andreessen, whose

wife teaches philanthropy at Stanford and whose father-in-law, John

Arrillaga, is one of the university’s foremost donors. Instead of

erecting buildings, Andreessen says, Stanford should invest even more of

its resources in distance learning: “We’re on the cusp of an

opportunity to deliver a state-of-the-art, Stanford-calibre education to

every single kid around the world. And the idea that we were going to

build a physical campus to reach a tiny fraction of those kids was, to

me, tragically undershooting our potential.”

Hennessy, like

Andreessen, believes that online learning can be as revolutionary to

education as digital downloads were to the music business. Distance

learning threatens one day to disrupt higher education by reducing the

cost of college and by offering the convenience of a stay-at-home,

do-it-on-your-own-time education. “Part of our challenge is that right

now we have more questions than we have answers,” Hennessy says, of

online education. “We know this is going to be important and, in the

long term, transformative to education. We don’t really understand how

yet.”

This past fall, Stanford introduced three free online

engineering lectures, each organized into short segments. A hundred and

sixty thousand students in a hundred and ninety countries signed up for

Sebastian Thrun’s online Introduction to Artificial Intelligence class.

They listened to the same material that Stanford students did and were

given pass/fail grades; at the end, they received certificates of

completion, which had Thrun’s name on them but not Stanford’s. The

interest “surprised us,” John Etchemendy, the provost, says, noting that

Stanford was about to introduce several more classes, which would also

be free. The “key question,” he says, is: “How can we increase

efficiency without decreasing quality?”

Stanford faculty members,

accustomed to the entrepreneurial culture, have already begun to clamor

for a piece of the potential revenue whenever the university starts to

charge for the classes. This quest offends faculty members like Debra

Satz, the senior associate dean, who regards herself as a public

servant. “Some of the faculty see themselves as private contractors,

and, if you are, you expect to get paid extra,” she says. “But, if

you’re a member of a community, then you have certain responsibilities.”

Sebastian Thrun quit his faculty position at Stanford; he now

works full time at Udacity, a start-up he co-founded that offers online

courses. Udacity joins a host of companies whose distance-learning

investments might one day siphon students from Stanford—Apple, the News

Corp’s Worldwide Learning, the Washington Post’s Kaplan University, the New York Times’

Knowledge Network, and the nonprofit Khan Academy, with its

approximately three thousand free lectures and tutorials made available

on YouTube and funded by donations from, among others, the Bill &

Melinda Gates Foundation, Google, and Ann and John Doerr.

Since so

much of an undergraduate education consists of living on campus and

interacting with other students, for those who can afford it—or who

benefit from the generous scholarships offered by such institutions as

Stanford—it’s difficult to imagine that an online education is

comparable. Nor can an online education duplicate the collaborative,

multidisciplinary classes at Stanford’s d.school, or the personal

contact with professors that graduate students have as they inch toward a

Ph.D.

John Hennessy’s experience in Silicon Valley proves that

digital disruption is normal, and even desirable. It is commonly

believed that traditional companies and services get disrupted because

they are inefficient and costly. The publishing industry has suffered in

recent years, the argument goes, because reading on screens is more

convenient. Why wait in line at a store when there’s Amazon? Why pay for

a travel agent when there’s Expedia? The same argument can be applied

to online education. An online syllabus could reach many more students,

and reduce tuition charges and eliminate room and board. Students in an

online university could take any course whenever they wanted, and

wouldn’t have to waste time bicycling to class.

But online

education might also disrupt everything that distinguishes Stanford.

Could a student on a video prompter have coffee with a venture

capitalist? Could one become a T-student through Web chat? Stanford has

been aligned with Silicon Valley and its culture of disruption. Now

Hennessy and Stanford have to seriously contemplate whether more

efficiency is synonymous with a better education.

In mid-February,

Hennessy embarked on a sabbatical that will take him away from campus

through much of the spring. His plans included travelling and spending

time with his family. The respite, Hennessy says, will provide an

opportunity to think. Of all the things he plans to think hard about, he

says, distance learning tops the list. Stanford, like newspapers and

music companies and much of traditional media a little more than a

decade ago, is sailing in seemingly placid waters. But Hennessy’s

digital experience alerts him to danger. He says, “There’s a tsunami

coming.”

♦

Yes, it's barely two years since Facebook made it possible to slap the

Like button onto content on external websites, which in turn has

expedited communication about everything from news stories to videos to

photos to fundraising appeals, making Facebook the leading referrer of

traffic to many content sites, as well as probably being responsible for

helping get innumerable Kickstarter campaigns funded.

Yes, it's barely two years since Facebook made it possible to slap the

Like button onto content on external websites, which in turn has

expedited communication about everything from news stories to videos to

photos to fundraising appeals, making Facebook the leading referrer of

traffic to many content sites, as well as probably being responsible for

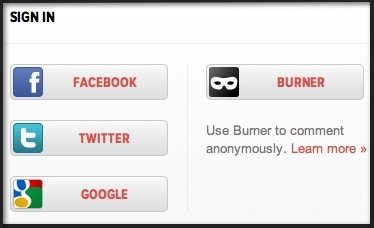

helping get innumerable Kickstarter campaigns funded. And yet, today, we take this system (which has been adopted by others, like Twitter and Google)

for granted. And maybe even get a little cranky when we have to set up

independent log-in credentials at sites that don't integrate with

Facebook. And this system (Facebook Connect) hasn't just made our lives

more convenient, it's helped accelerate a whole new industry of apps and

websites that have been able to get up and running faster, because they

haven't had to build their own identity management systems but instead

were able to just plug in to Facebook's (the same way they get up and

running faster because they can use Amazon Web Services rather than

building out their own server infrastructure).

More

often than not, they'll send you straight to their Facebook page. The

social network has created powerful tools for brands to build excitement

(and evangelism) among consumers, and companies are choosing to use

those pages as their primary home on the web. Even GM, which provoked a

stir earlier this week when it was reported the automaker was killing

its $10 million Facebook advertising budget, said it would nevertheless

continue to invest in its brand pages--to the tune of $30 million, no

less--because, the company said, "it continues to be a very effective

tool for engaging with our customers."